On Tracking Data, the Nature of Soccer, and Allocation

Don't take event data at its word

In this one, I want to talk about soccer analytics, its bright but contested future, and what in my opinion is an urgent shift in mindset needed to prevent the foreclosure of this exciting horizon.

But let’s just talk about soccer for a minute cuz soccer analytics is about generating insights based on measurements of soccer that we can capture. And, soccer is hard as hell.

What is soccer?

Bear with me. The objective of any soccer team is to create shots near the opponent’s goal (and not concede shots near its own goal). Broadly speaking, this involves controlling the ball in your opponent’s penalty area (not always, but it’s a good rule of thumb). Controlling the ball as a team in your opponent’s penalty area involves moving the ball into the penalty area successfully, and this is hard to do (and therefore uncommon). If you’re trying to tell whether a team is good or not, we know for sure that basing your assessment on some sort of analysis of the above (analyzing the output of a team, i.e. whether they’re creating chances) is a good idea. You could just look at goals scored, but goals are so rare that at any realistic point in time you rarely have a big enough sample of past goals to make relevant and timely judgments about future goals. Anyhow…

Because successfully moving the ball into your opponent’s penalty area is hard, and because your opponent is trying to stop you from doing this, most of soccer is actually not moving the ball successfully into your opponent’s penalty area. It’s mostly just moving lots of things around outside of the penalty area (you, your teammates, the ball, the other team’s players) in order to hopefully create an opportunity to move the ball into the penalty area some time in the near future. You could say most of soccer is a constant struggle of two teams constantly trying to build the capacity with which they might successfully enter the penalty area with the ball before the other team does. You don’t need to measure all of this building capacity stuff (which again is most of what happens in a game) in order to tell whether a team is good or not — you just need a large enough sample size of the outputs - the stuff we talked about in that first paragraph, but if you want to know how or why a team is good (i.e. how is it that they’re able to consistently move the ball into their opponent’s penalty area or why they suck at doing that), you do need to measure this other stuff — all the stuff that’s not moving the ball into your opponent’s penalty area but the building capacity to move the ball into your opponent’s penalty area.

Data and modeling “what” vs “how”

For some time now, we’ve been able to measure the relevant outputs themselves really well with event data (detailed records of everything that happens on the ball). Event data can tell us really quickly how many shots per game a team is creating in the penalty area. Further, if we want to put that into goal units (and you know we do here), expected goals based on shot events (and other context within the event data) accomplishes this well. If you want a slightly bigger sample size, event data can also tell you directly about the rate at which a team successfully creates box entries (carries or passes into the box). With a little modelling, “probability added” models (“goals added” or “expected possession value” models) can measure this same activity in goal units, just like xG. Also just like xG, they are missing plenty of direct off-ball contextual information, so they use possession characteristics as proxies for it when estimating the goal probabilities before and after each action. So, again, if you’re only interested in whether a team is good or not, event data (and models based on event data) do the trick quite nicely. Pick your poison: xG, xA, EPV/g+. When you’re using these, you’re mostly just measuring outputs and picking up enough signal to know “which” teams are good and “which” teams aren’t.
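As a rough illustration of how directly event data answers the “what,” here is a minimal Python sketch that counts box entries per game. The event fields (`team`, `type`, `completed`, `start_x`, and so on) are a hypothetical schema, not any provider’s actual format, and the coordinates assume a 105 x 68 meter pitch with the team attacking left to right.

```python
# Assumed pitch: 105 x 68 m, attacking left to right.
# The penalty area is 16.5 m deep and 40.32 m wide.
PITCH_LENGTH, PITCH_WIDTH = 105.0, 68.0
BOX_X_MIN = PITCH_LENGTH - 16.5
BOX_Y_MIN = (PITCH_WIDTH - 40.32) / 2
BOX_Y_MAX = PITCH_WIDTH - BOX_Y_MIN


def in_box(x, y):
    """True if the point lies inside the opponent's penalty area."""
    return x >= BOX_X_MIN and BOX_Y_MIN <= y <= BOX_Y_MAX


def is_box_entry(event):
    """A completed pass or carry that starts outside the box and ends inside it."""
    return (
        event["type"] in {"pass", "carry"}
        and event["completed"]
        and not in_box(event["start_x"], event["start_y"])
        and in_box(event["end_x"], event["end_y"])
    )


def box_entries_per_game(events, team, games_played):
    """Count one team's box entries and divide by games played."""
    entries = sum(1 for e in events if e["team"] == team and is_box_entry(e))
    return entries / games_played


# Hypothetical rows, invented for illustration only.
sample_events = [
    # Completed pass from outside the box into it: counts.
    {"team": "A", "type": "pass", "completed": True,
     "start_x": 70, "start_y": 30, "end_x": 95, "end_y": 34},
    # Carry that starts inside the box: not an entry.
    {"team": "A", "type": "carry", "completed": True,
     "start_x": 92, "start_y": 30, "end_x": 98, "end_y": 34},
    # Incomplete pass toward the box: not an entry.
    {"team": "A", "type": "pass", "completed": False,
     "start_x": 75, "start_y": 40, "end_x": 96, "end_y": 36},
    # Entry by the other team: not counted for team A.
    {"team": "B", "type": "carry", "completed": True,
     "start_x": 80, "start_y": 34, "end_x": 90, "end_y": 34},
]

entries_a = box_entries_per_game(sample_events, "A", games_played=1)
```

Notice that every row here has exactly one team and one on-ball action attached to it. That is what makes counting outputs so easy with this kind of data.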

But forget about the outputs. They are boring. Those other questions, the “how?” or the “why?” (“how does a team create the outputs they do?” or “why is it that they’re good?”), are questions you should care about, because they are the core of the game: the vast majority of play, where two teams are trying to create the capacity to create the outputs while constantly succeeding and failing at varying rates and in various ways. If you’re trying to figure out how to build a team that can create good outputs, then you need to be measuring the “how,” because the “how” is individual players contributing to a collective team effort, and the outputs are due in varying degrees both to those individuals and to broader team dynamics. And if you’re a front office trying to build a winning team, you want to be able to measure an individual player’s contributions in this “how” area. The outputs aren’t enough.

The “how” is a difficult question, and there’s much we can discuss there, but something that we know is that if we’re going to tackle this question with data, event data just isn’t going to cut it. The noblest efforts to date using event data are definitely the goals added / expected possession value type models, which we’ve talked about before. The thought is, if the “how” comprises individual possession sequences of a team trying to move lots of stuff around (themselves, the ball, their opponents) and each possession sequence comprises individual actions on the ball and our data is organized into possession sequences and individual events (actions), then we can throw a decade plus of soccer event data organized into these same units and have the machine learning spit out the probability of scoring a goal during the possession before and after each action we’re measuring, allowing us to “value” the actions in goal units, with the most common next step of measuring a player’s contribution based on his participation in these valued actions.

But, we know from our description of soccer above that the “how” involves moving not just the ball, but your players, and trying to move your opponent’s players in order to create the capacity to move the ball into the box. The event data models can only guess at all this important stuff happening off the ball (where so much of the “how” is happening) because the event data is only organized into rows of events occurring on the ball. By contrast, “Tracking Data,” when combined with event data, captures the location and movement vectors of all 22 players and the ball at 25 frames per second. If what event data is missing is the “off ball” movements which we suspect tell us a whole lot about “how” teams build capacity to create dangerous box entries, then obviously Tracking Data would improve the accuracy of these individual goal probability measurements before and after each data event. Sometimes, you’ll hear the benefit of tracking data summarized as the addition of “off-ball context” to the data and models we already have.

And this is an accurate, if limited, statement (and not what this post is about). If you’re going to figure out the “how” and you’re going to do it by measuring the values of actions with data, you need this off-ball context for more accurate measurements. Sure. But there is another fundamental truth about the game and about measuring the game that must be accounted for, one that is only revealed by reflecting on why we need tracking data to measure the events more accurately in the first place. Simply put, it’s because soccer isn’t a series of discrete on-ball events, even if the record keeping is organized this way. It’s continuous interdependence.

We’ll walk through a soccer example which I think clearly illustrates how (yea, sure) tracking data improves the accuracy of the event measurements, but more crucially, in doing so, raises bigger questions about why we’re even still thinking about the game this way at all.

The example

Consider a vertical pass from the center back to the center forward in the final third but not inside the penalty area, without allowing yourself to fill in any other additional context. This is actually quite hard to do without cheating. Your brain wants to at the very least determine whether he’s being played through or whether he’s dropping off the back line, whether the ball is lofted or on the ground, whether he’s in a pocket of space or has a defender on his back, and models powered by event data can only guess at the average of all of these possible situations based on its location and a few other variables.

Let’s say now that you can see clearly there’s a defender on the forward’s back and he’s receiving the ball on the ground facing away from goal, and there’s even another spare defender in the area who’s ready to swoop in and challenge the center forward for the ball. Based on that information, we probably already know more about the likelihood of the pass being completed than we would have previously, but for the rest of the exercise let’s go beyond that and assume that the pass is completed. This additional context about where the nearest defenders are, without any other context would tell us that the value of that pass might be lower than the average from our fully context-blind imagination of the completed pass (the event data only model). The presence of immediate defensive pressure suggests possession may very well not be retained here, despite a successful pass that moved the ball closer to the goal. The goal probability as the pass is completed to the forward is lower than it would be without this context because losing possession hurts your goal scoring chances.

Now, let’s say that right as the ball is being played to the center forward by the center back, a teammate has moved inside (from the wing), or up (from deeper midfield) and is making himself available to the center forward for an easy lay-off pass, and he’s free to receive this pass without immediate pressure. Well, now with this additional context we can imagine a substantively higher goal probability within the possession at the culmination of the forward pass from the center back to the center forward. The likelihood of the ball being retained (and therefore the possession continuing) has increased, which is an overall boost to any goal probability, but especially in an area of the field where the center forward is operating.

But even more context would change our estimation of the goal probability again. If the center forward successfully receives the pass and he has this easy layoff option available to him, that’s great - better than if he did not, but let’s say that the easy layoff is there but there are also 4 defenders behind the ball, the two that challenged him and two on the flanks and the 4 are pinching in a bit such that they comfortably outnumber the 2 players upfield here. The 2 attackers will have reinforcements in a second, but they’re still sprinting upfield to catch up to the play as are other defenders. Sure, the layoff man still isn’t under immediate pressure, so there’s a positive goal probability there from sustained possession in a dangerous area while the wing reinforcements arrive, but this goal probability is certainly greater if we give him some immediate reinforcements.

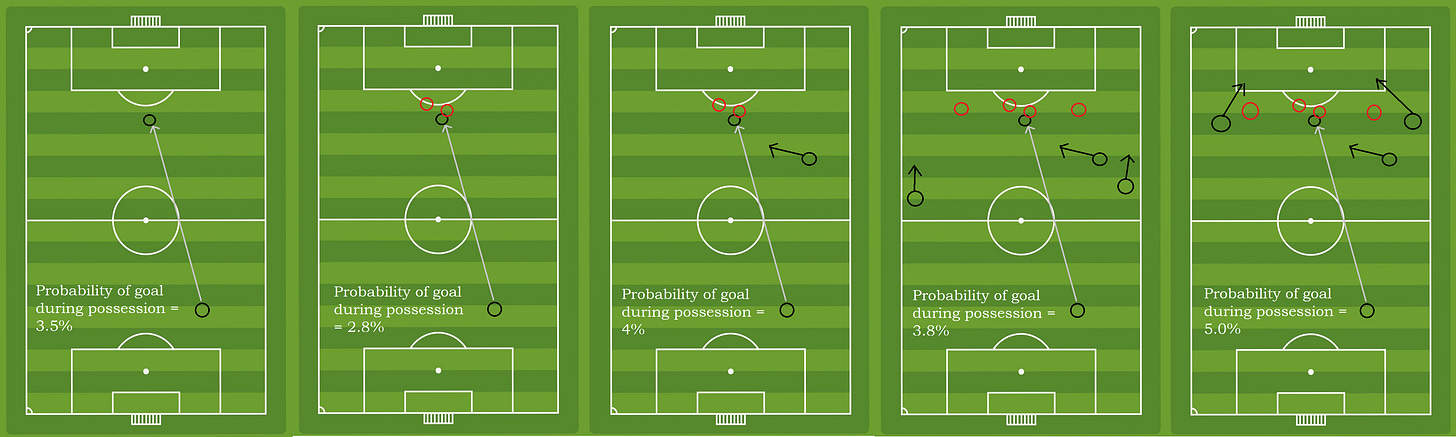

Let’s say now that when the lay-off man shows for the ball, he has an overlapping runner on his right flank, creating space for the runner himself (if the nearest defender stays compact) or for the layoff player receiving the ball (if the defender tracks the runner), and perhaps there’s even another wide player on the opposite left flank making a similar gut-busting run. Well again, this added context changes the estimated goal probability at the culmination of that first pass upward. Not only does the team have sustained possession in a dangerous area, but there are imminent threats to, or contests for control of, key spaces in the final third and the penalty area itself. To put some fake numbers on it:

So with the benefit of this additional context, we can imagine how the goal probabilities before and after the pass (the difference between these two moments is calculated as the “action value” of the pass) might fluctuate within a goals added or EPV model. And we can sort of intuit that more context is better in terms of accurately modelling the goal probabilities before and after the pass.
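To make that arithmetic concrete, here is a minimal Python sketch of how the action value of that one completed pass could move as context is layered in. Every number is an invented placeholder chosen only to follow the direction of the walkthrough (lower with pressure, higher with a free layoff, higher again with runners). None of them come from a real g+ or EPV model, and the scenario labels are just shorthand.

```python
# Hypothetical goal probability just before the center back plays the pass.
P_BEFORE = 0.020

# Hypothetical goal probability at the moment the pass is received,
# under each successive layer of context from the walkthrough.
p_after_by_context = {
    "event data only (average situation)": 0.035,
    "+ defender on his back, spare defender nearby": 0.025,
    "+ free layoff option": 0.040,
    "+ four defenders behind the ball, no runners yet": 0.035,
    "+ overlapping runners on both flanks": 0.060,
}


def action_value(p_before, p_after):
    """Action value = goal probability after the action minus before it."""
    return p_after - p_before


# Same pass, same passer, same receiver: only the off-ball context changes.
action_values = {
    context: round(action_value(P_BEFORE, p_after), 3)
    for context, p_after in p_after_by_context.items()
}

for context, value in action_values.items():
    print(f"{value:+.3f}  {context}")
```

The on-ball event is identical in every row. The whole spread in value comes from what the other players are doing.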

So, let’s say we have perfect, omniscient god-level tracking data now, fully aware of all possible context and all 22 players at every moment, and let’s say we have the computing power to model it all to generate “perfect” goal probabilities before and after the pass. If we want to use these values to measure individual player contributions (a typical use case of g+), then where we go from here is the question of this post, and if you’re following along it’s a more complex question than we’d previously imagined.

Attribution vs Allocation

I mean, the sort of “consensus” next step you see commonly is to take that action value tagged to the pass event and just directly attribute it to the guy who made the pass. After all, the unit of account we’re measuring is the event, and the event “belongs” to the passer in the raw data. Makes sense I guess. Or you might see the pass value allocated between the passer and the receiver somehow (like American Soccer Analysis does it with g+). But our above walkthrough should really challenge the whole question itself.

We were able to get a more accurate value of that “pass event,” but to do it we had to acknowledge the obvious fundamental truth of soccer: that it’s a game of collective action. With the benefit of the additional “context,” the change in goal probabilities from before the center back passed to after the center forward received was the result of contributions from many players involved: the center back passing, the center forward creating a glimpse of space to receive, the midfielder showing for the layoff, the wing players appearing in time to really threaten the defense. The presence of the defenders (where and how many) and their reactions during the pass also impacted the probability of scoring. One team is making coordinated movements to create space and the other team is structuring itself to constrict it. We could literally see those differences in the measurements as the context was added or taken away. So to use the “context” for increasing measurement accuracy, and then ignore it for determining player contributions and just assign the value of the pass to the passer only (or to the passer and the receiver only), is a bad plan. The data might be organized in rows of events tagged to individual players on a 1 for 1 basis, but we don’t have to take the structure of the old data records at its word.

As we move forward into a future where tracking data is more ubiquitous and powers future goals added models, let’s not be stuck in the old mindset of “rows of events tagged to individual players” when we know (and can even see it in the tracking data) that’s not how it actually works.

We cannot pretend to attribute various events specifically to individuals when the tracking data screams that all 22 players are impacting the two teams’ probabilities of scoring in every moment. As goals added type models begin to incorporate all of this “space” into their measurement of the goal probabilities in a given moment (William Spearman’s “Pitch Control” and Javier Fernandez and Luke Bornn’s “Expected Possession Value”), they become more powerful and accurate. We will then necessarily need to think more about “allocation” than “attribution” when it comes to actually taking the more accurate values and mapping them to player contributions. With that comes an important honesty and transparency: we are making specific choices based on assumptions, rather than directly measuring a player’s contribution (as was the inherent suggestion with EPV models coming out of event data). In reality, it was always allocation amongst a population of players, and it always will be. We just never really questioned it when 100% of the value was being allocated every time to a single individual instead of to multiple contributors. The aesthetics of one to one assignment suggested there was actual individual causality being measured, even if that wasn’t the intention (a model is only a model, etc).
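The difference between the two mindsets is easy to see in a few lines of Python. This is only a sketch: the action value and the weights are hypothetical placeholders, and picking those weights is exactly the judgment call this post is about. For simplicity it leaves out the defenders, who also move the probabilities.

```python
def attribute(action_value, on_ball_player):
    """Attribution: 100% of the value goes to the player tagged on the event row."""
    return {on_ball_player: action_value}


def allocate(action_value, weights):
    """Allocation: split the value across contributors in proportion to weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {player: action_value * w / total for player, w in weights.items()}


# Hypothetical action value for the center back's pass, in goal units.
ACTION_VALUE = 0.04

# Hypothetical weights: an explicit assumption, not a measurement.
weights = {
    "center back": 0.35,          # plays the pass
    "center forward": 0.25,       # creates the space to receive
    "layoff midfielder": 0.20,    # shows for the easy next pass
    "right-flank runner": 0.10,   # threatens space on the overlap
    "left-flank runner": 0.10,    # threatens space on the far side
}

attributed = attribute(ACTION_VALUE, "center back")
allocated = allocate(ACTION_VALUE, weights)
```

Both versions hand out the same total value. Attribution is just allocation with a weight of 1 on the passer and 0 on everybody else, which is an assumption too, only an unstated one.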

The Point

Soccer is not a game of discrete events, individuated from one another and attached to individual players on a 1 to 1 basis. It is not a game of 1v1 duels, despite what you’ll hear from some coaches and analysts. It is a game of interdependence and of individual feats of excellence nested within relationships between teammates, opponents, space, the ball and an abstract collective charge.

Remember, we decided the “how” of soccer was about the players in possession moving around, moving the ball around, and trying to move the opponent’s players around in order to try to create the capacity to then move the ball successfully into the penalty area for a shot (the “what”). Once you have perfect tracking data (if this ever exists or when it exists - just roll with this), not only can you measure the goal probability at discrete moments earlier in a possession on either side of a given event record more accurately (the “how”), but if you want, you can decide to measure it continuously at 25 frames per second rather than discretely before and after an event. And, not only can you assign the value of a pass to the passer, but you can assign the value of whichever unit you choose to all of the players involved (all 22 players on both sides of the ball with varying weights of responsibility or contribution) since they are clearly impacting these probabilities through the way they change the “context” in the tracking data. You can decide that your unit of account is based on an individual frame within a second, or base it on a time interval itself, or an event (a pass), or perhaps a “play” (a pass, the receipt, and the next pass?) occurring within several seconds, or you can use the unit of the entire team possession itself (several actions and all the moments in between). And then you can decide how to allocate the value of whichever unit you’ve just chosen based on whatever allocation method you decide. And I would argue that you need to do this, and further that this is really hard, and really important. And that some of these choices will bear better fruits in terms of predicting stable “player values” than others. And I’ll point out that I don’t know how to do any of this.
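One property of the “unit of account” choice can be sketched in Python. If the goal probability is measured at every frame, the value of any larger unit (an event, a play, a whole possession) is just the sum of the frame-to-frame changes inside it, so the choice of unit doesn’t change the total, only how it gets chunked before being allocated. The probabilities and per-frame weights below are hypothetical placeholders, and `allocate_frames` is one assumed scheme among many possible ones.

```python
FPS = 25  # tracking frames per second, as described above


def frame_deltas(probs):
    """Change in goal probability between each pair of consecutive frames."""
    return [later - earlier for earlier, later in zip(probs, probs[1:])]


def unit_value(probs, start_frame, end_frame):
    """Value of any unit of account spanning start_frame..end_frame."""
    return probs[end_frame] - probs[start_frame]


def allocate_frames(deltas, frame_weights):
    """Split each frame's delta by that frame's weights, then total per player."""
    totals = {}
    for delta, weights in zip(deltas, frame_weights):
        weight_sum = sum(weights.values())
        for player, weight in weights.items():
            totals[player] = totals.get(player, 0.0) + delta * weight / weight_sum
    return totals


# Hypothetical goal probabilities over five consecutive frames (0.16 seconds).
probs = [0.020, 0.022, 0.030, 0.028, 0.040]
deltas = frame_deltas(probs)

# Hypothetical per-frame weights; they may change from frame to frame.
frame_weights = [
    {"passer": 0.6, "receiver": 0.4},
    {"passer": 0.5, "receiver": 0.3, "runner": 0.2},
    {"receiver": 0.5, "runner": 0.5},
    {"receiver": 0.4, "runner": 0.6},
]

player_totals = allocate_frames(deltas, frame_weights)
possession_value = unit_value(probs, 0, len(probs) - 1)
```

Here the per-player totals add back up to `possession_value`. The hard part, which this sketch simply assumes away, is where the weights come from.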

And I think this is sort of my point with this post. Soccer is about abstract coordination of a tactical plan amongst players who all individually have agency and can manifest these ideas through a combination of individual brilliance and collective action. The off-ball players are not just “context” for a game that is crystallized in the ball and in the on-ball player. The 21 other players operating off the ball are the game itself, just as much as the on-ball player is, and they are actively shaping important goal scoring probabilities, probabilities that front offices should use to measure team performance and the contributions of individual players toward that team performance. All 22 players are trying to build the capacity to create output.

Soccer is this way whether you organize your data into individual rows and assign those individual rows to individual players or whether you measure the game continuously, taking into account the full “context” occurring off the ball and allocate these context-rich value measurements out to the 22 players in play. Soccer is this way whether or not the television broadcast and video games frame the player on the ball as the protagonist and critique his every move once he gets the ball, never crediting him with having arrived on the ball in the first place. And, it is this way whether or not the first revolution in data analytics culminated with a broad consensus that you could measure a player’s attacking contribution to his team, based on how much he improved his team’s goal scoring probability once he was on the ball while ignoring his off-ball contribution to make himself available to be on the ball. Soccer is this way whether or not the growing adoption of tracking data will illuminate the way it is for the next revolution to unlock deeper insights about the game.

It would be such a shame if in the future, tracking data was simply valued as richer “context” for valuing on-ball moments because we had falsely learned that the game was about what happens on the ball, and we had falsely learned this simply because the event data was organized that way. With tracking, the promise of using data to better understand the full game is on the table, but it won’t bear these fruits, if we don’t uproot the false (and understandably practical) frameworks sown in the past.

Bonus 1: How to allocate?

This is a topic for a later post, or perhaps for the real analytics community to tackle (not me). But when I think about allocating values that have been derived robustly in the data, I am encouraged by the mindset that the community has applied (largely in failure) to defensive statistics over the years. The basic idea has always been like “yea yea, analytics is good in attack cuz you can see who’s on the ball, but in defense you don’t know what sort of opportunities to make defensive plays individual players have, so you have all these problems of ‘should we possession adjust the stats’ or how do we articulate the boundaries of each player’s responsibilities, etc.” In short, everyone identified that event data couldn’t capture the essence of defending directly on a row by row basis, and so they had to think really creatively about how we might imagine a perfect defensive metric and try a bunch of shit. I’m incredibly encouraged by the ideas laid out by Aditya Kothari and his Opta presentation on denying space. Blipping each player off the pitch, one by one, and calculating the hypothetical space that opens up in every moment may not map perfectly to an inverse attack, and surely it’s not perfect for defense either (this stuff is hard), but it’s this sort of abstract approach that I think will open doors on the other side of the ball with attacking stuff. I mean, in short, my suggestion here is that what the analytics community rightly identified for years about the inadequacy of event data for defense applies to the other side of the ball as well. I mean, to an extent it must. Everything is connected.
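To give a flavor of the “blip each player off the pitch” idea, here is a toy Python sketch for a single frame. It is not Kothari’s actual method and not Spearman’s Pitch Control. It swaps in a much cruder proxy where each grid cell belongs to whichever team has the nearest player, and the function names, grid size, and player positions are all assumptions made for illustration.

```python
import math


def attacking_share(attackers, defenders, step=1.0, length=105.0, width=68.0):
    """Fraction of grid cells whose nearest player is an attacker (crude proxy)."""
    if not attackers:
        return 0.0
    if not defenders:
        return 1.0
    owned = cells = 0
    for i in range(int(length // step)):
        for j in range(int(width // step)):
            cell = ((i + 0.5) * step, (j + 0.5) * step)  # cell center
            nearest_att = min(math.dist(cell, p) for p in attackers)
            nearest_def = min(math.dist(cell, p) for p in defenders)
            cells += 1
            if nearest_att < nearest_def:
                owned += 1
    return owned / cells


def space_denied(attackers, defenders, **grid):
    """For each defender, the extra attacking share that opens up if he is removed."""
    baseline = attacking_share(attackers, defenders, **grid)
    denied = []
    for i in range(len(defenders)):
        without = defenders[:i] + defenders[i + 1:]
        denied.append(attacking_share(attackers, without, **grid) - baseline)
    return denied


# Hypothetical single frame: (x, y) positions in meters.
attackers = [(60.0, 34.0)]
defenders = [(70.0, 34.0), (100.0, 34.0)]

denied = space_denied(attackers, defenders)
```

In this toy frame, removing the defender at (70, 34) opens space for the attacker, while removing the deeper one at (100, 34) opens none, because the first defender would simply inherit that territory. Even a proxy this crude shows the interdependence: each defender’s “contribution” depends on where his teammates are standing.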

FWIW, I would be curious to know how William Spearman, Javier Fernandez, and David Sumpter are thinking about this question of how to turn the values themselves into player contributions.

Bonus 2: Chris Paul and the “Maintainers”

As I was writing this, a video jumped into my twitter feed from Ben Taylor about the NBA and Chris Paul (go Deacs!), and it’s pretty good. There’s a bit in here talking about how Paul’s primary threat as a “creator,” using a double screen and driving and dishing, hasn’t changed so much from his early days in the NBA until now as a veteran. What’s changed is that his teammates, the “maintainers” as this video calls them, his wing players, are doing a better job positioning themselves in more advantageous positions to receive the ball for a shot as he is driving to the hoop and the defense is collapsing. Namely, the maintainers are behind the three point line instead of just in front of it like they used to be. I thought about how yes, Chris Paul is the “creator” here, moving the ball into dangerous high value areas and then assessing the options in front of him, but the shape of his teammates in relation to him (behind the three point line instead of in front of it) is absolutely impacting the expected point value of the possession as he’s driving toward the basket. These players are not “context” for what Paul is doing; they are actively shaping the outcomes of the team’s possessions.

Bonus 3: Attribution and Allocation in Financial Accounting

Since we try to tie stuff back to corporate finance topics here…

In corporate finance and accounting, where attribution vs allocation comes up the most is when a company with multiple operating segments or business units incurs costs centrally that benefit or drive revenue for several of its segments or products. Most companies with multiple operating segments like this manage them quasi-independently, with the segment managers responsible for the results of their individual business. For any given invoice received through the corporate procurement or A/P department, there’s a decision to be made as to whether the expense should be borne by a single operating segment (attribution or assignment), or whether the cost should be allocated using a reasonable and systematic method. The allocation process is often difficult (and contentious) and accountants bristle at the admitted uncertainty of not being able to directly assign the costs to a specific business, and yet direct assignment often improperly reflects the results of a given business, given the shared nature of many of these costs. You can sort of feel this same sentiment in the soccer analytics world, a hesitancy to admit that we just do not know the exact causality of who is contributing on a given sequence of events. After all, the data is organized with a specific player tagged, like an invoice with a specific business unit printed on the top. After all the hard work of building detailed expected possession models, powered by supervised machine learning, it just feels a little bit unscientific to allocate the results based on an admittedly judgmental method. And yet, similar to the financial world, the direct assignment of these actions is just not reflective of the underlying reality of how soccer works. My suspicion is that just like in accounting, we’ll get a better result by admitting this and allocating the value of actions or sequences to those involved based on a smart allocation process.

Thanks for sticking around. More to come, but I cannot promise a consistent cadence at this point.